Connecting R53 With External Domains


In the last post, I discussed why and how I passed the AWS Solutions Architect exam. In this post, we will see how I connected Route 53 (AWS’ DNS and domain service) to an external domain provider (Google Domains) and served a static website hosted in AWS S3. While this is not directly related to the exam, it is the kind of solution a solutions architect may be asked to implement in real-life scenarios.

I had taken my notes in Markdown, converted them to HTML and hosted them using S3 static website hosting. I wanted to serve them from my own domain, which is registered with Google Domains. It wasn’t easy: it took multiple tries just to get the whole setup working without HTTPS. You can now see the notes site, which is a subdomain of my main domain, served over HTTPS. Here are the steps to connect Route 53 with an external domain provider and serve anything using CloudFront (in this post’s case, an S3 static website).

Steps to serve CloudFront using Route 53 and External Domains

- Connect Route 53 to External Domains

- Prepare S3 bucket for Static Website Hosting

- Getting certificate using AWS Certificate Manager

- Creating CloudFront distribution to serve the site.

- Connecting Route 53 to CloudFront to serve the site using external domain.

We will discuss each step in detail.

1. Connect Route 53 to External Domains

AWS’ Route 53 is a service that allows us to register domains, just like Google Domains, HostGator, GoDaddy and other domain name providers. Apart from registering domains, Route 53 offers hosted zones. A hosted zone holds the DNS records that connect a domain to AWS services such as EC2 instances, load balancers, API Gateway or static websites on S3. To connect Route 53 to an external domain, we will use a public hosted zone. Do note that a hosted zone in Route 53 is not free, while the other services we are going to use cost little to nothing.

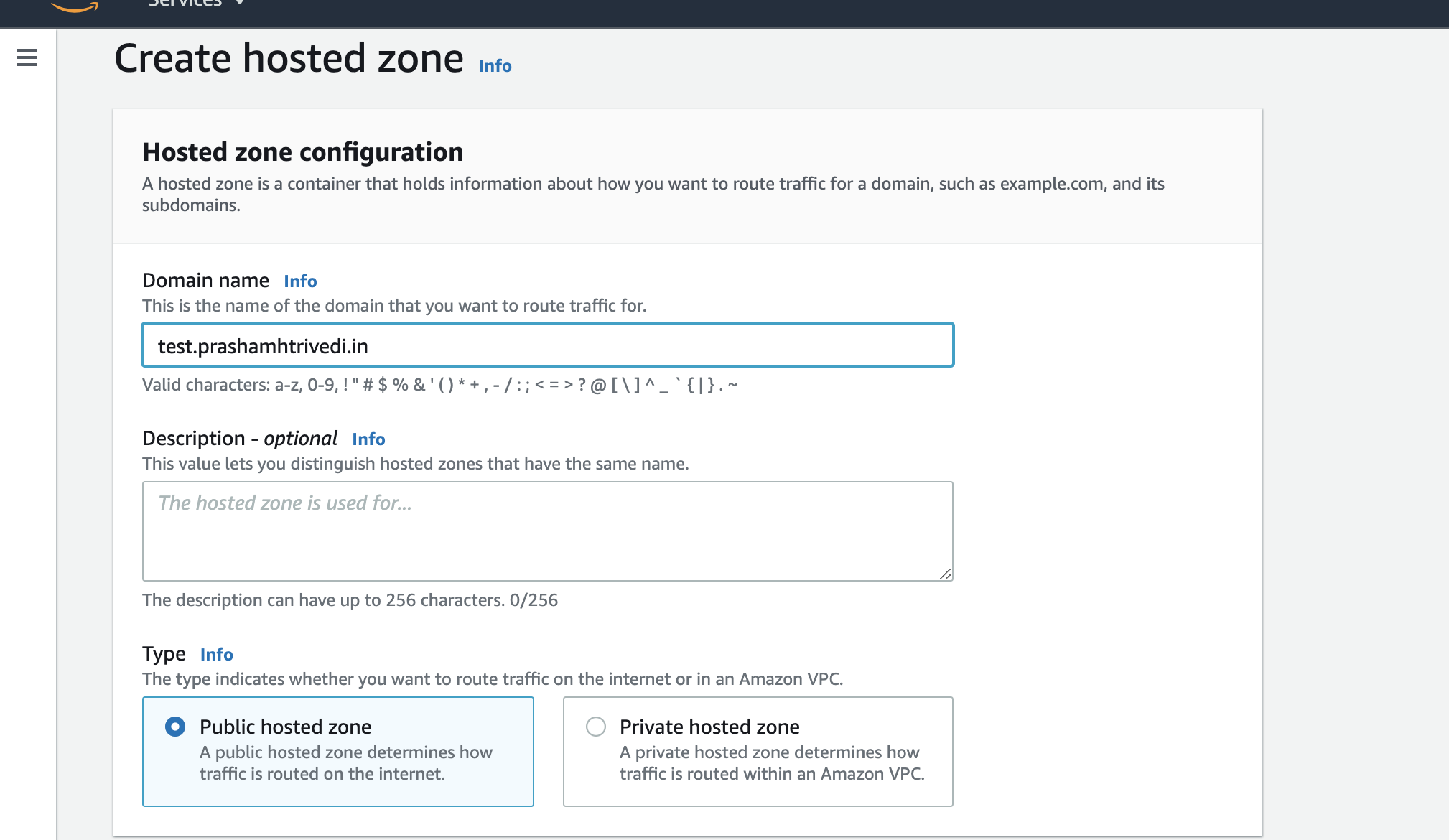

Create a public hosted zone, and in the Domain Name field, enter your desired domain including the subdomain (for example, test.example.com), like below.

Create Hosted Zone

When your hosted zone is created, it contains two records by default. One is the NS record (NS stands for name server), and the other is the SOA (Start of Authority) record. The NS record usually has 4 entries. Copy them and go to your domain provider (in our case, Google Domains). Create a new custom record whose name is your subdomain without the main domain (like test in the case of test.example.com), set its type to NS, and add all 4 entries as its values. If you did it properly, the new entry should look something like below.

NS Entry in external domain provider
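To make the delegation concrete, here is a minimal Python sketch of what ends up in the external provider. The function name `delegation_record` and the name server values in the usage note are hypothetical; use the 4 values Route 53 shows in your own hosted zone.

```python
def delegation_record(subdomain_fqdn: str, parent_domain: str, name_servers: list) -> dict:
    """Describe the NS record to create at the external domain provider."""
    fqdn = subdomain_fqdn.rstrip(".").lower()
    parent = parent_domain.rstrip(".").lower()
    suffix = "." + parent
    if not fqdn.endswith(suffix):
        raise ValueError(f"{fqdn} is not a subdomain of {parent}")
    # Providers usually want only the part before the main domain,
    # e.g. "test" for test.example.com.
    host = fqdn[: -len(suffix)]
    # Route 53 may show name servers with a trailing dot; normalize them.
    values = [ns.rstrip(".").lower() for ns in name_servers]
    return {"host": host, "type": "NS", "values": values}
```

For example, `delegation_record("test.example.com", "example.com", [...])` gives a record with host `test`, type `NS` and the name servers as values, which mirrors the custom record described above.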

Now your external domain is ready to serve anything which can be served by Route 53 on your given subdomain. It’s now time to prepare S3 static website hosting.

2. Prepare S3 bucket for Static Website Hosting

For this step, I assume you already have a static website ready, with index.html and optionally error.html serving as the entry point and error page respectively. When you have them ready, create a new S3 bucket with the same name as your site. For example, if you want to serve test.example.com, create an S3 bucket named test.example.com. I recommend creating this bucket in the us-east-1 (N. Virginia) region, the same region where AWS Certificate Manager must issue the certificate that CloudFront uses to serve this bucket over HTTPS.

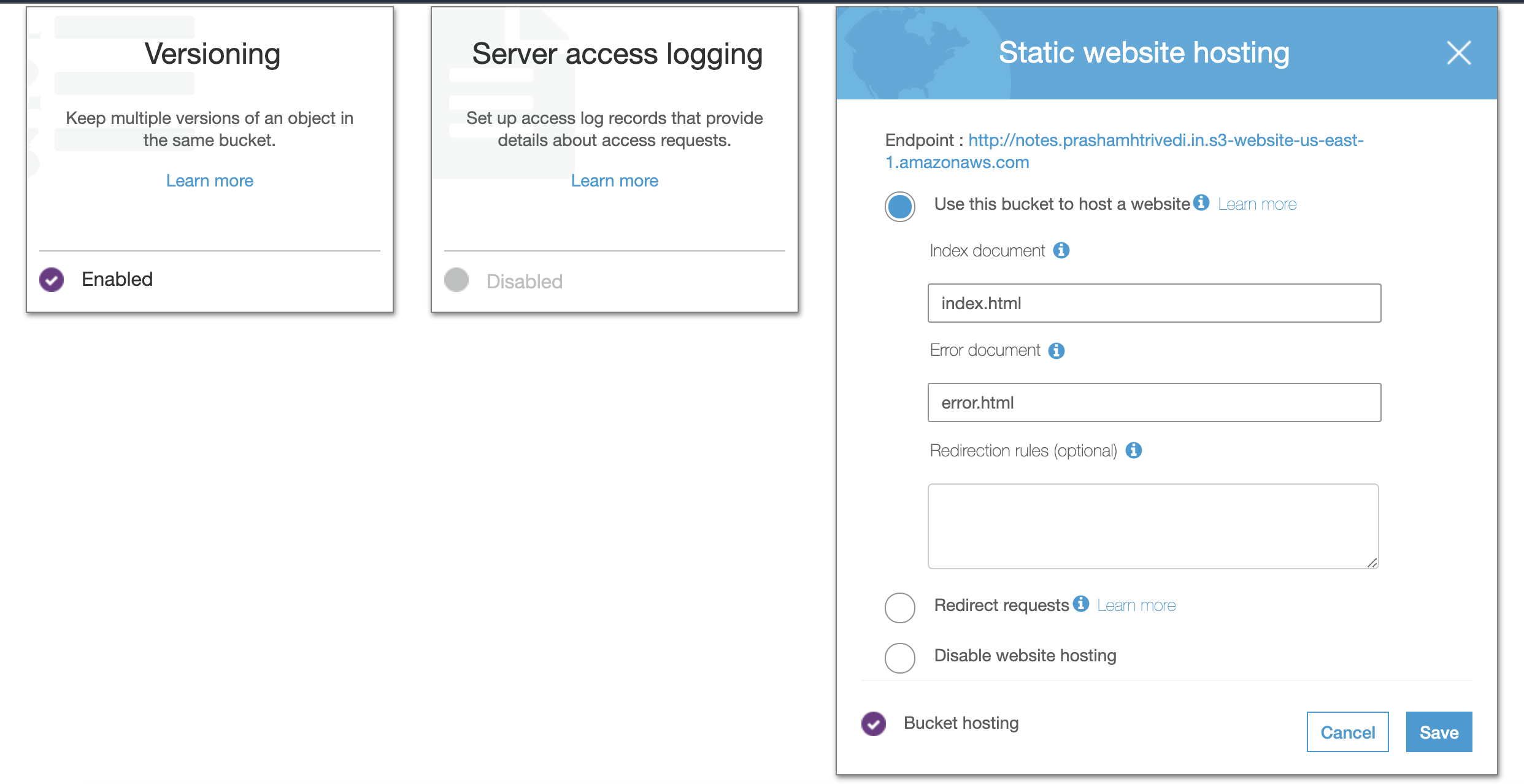

Once it’s created, change the bucket settings to enable static website hosting as well as versioning. For static website hosting, you need at least an index.html page, which serves as the entry point, and an error.html page, which serves as the error page. If you don’t want a separate error page, you can use index.html as your error page as well.

Bucket Options
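If you prefer scripting over the console, the same index and error settings can be expressed as a small dictionary. This is only a sketch: the helper name `website_configuration` is mine, and I am assuming the dictionary is passed as the `WebsiteConfiguration` argument of boto3’s `put_bucket_website` call.

```python
from typing import Optional


def website_configuration(index_document: str = "index.html",
                          error_document: Optional[str] = None) -> dict:
    """Build the static website settings for an S3 bucket."""
    # Without a separate error page, reuse the index page as the error page.
    error_key = error_document or index_document
    return {
        "IndexDocument": {"Suffix": index_document},
        "ErrorDocument": {"Key": error_key},
    }
```

Calling `website_configuration(error_document="error.html")` covers the case with a dedicated error page, and calling it without arguments falls back to index.html for both.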

To serve the site publicly, you also need to turn off all the Block all public access settings and apply a bucket policy that allows public read access. The bucket policy should be as below, where you need to replace notes.prashamhtrivedi.in with your own bucket name.

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicRead",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                "Resource": "arn:aws:s3:::notes.prashamhtrivedi.in/*"
            }
        ]
    }
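Since the only thing that changes in this policy is the bucket name, it can be generated instead of edited by hand. The sketch below uses a hypothetical helper, `public_read_policy`, and returns the policy as a JSON string that could be pasted into the bucket policy editor.

```python
import json


def public_read_policy(bucket_name: str) -> str:
    """Return a public-read bucket policy for the given bucket as JSON."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicRead",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                # The /* suffix applies the rule to every object in the bucket.
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }
    return json.dumps(policy, indent=2)
```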

With these steps applied properly, you can browse your website using the address shown as the Endpoint in the Static website hosting block of the Properties section. At this point, the site is ready to be served, but it is served from an amazonaws.com domain and not from our subdomain. To serve it from our subdomain, let’s move to the next step.

3. Getting certificate using AWS Certificate Manager

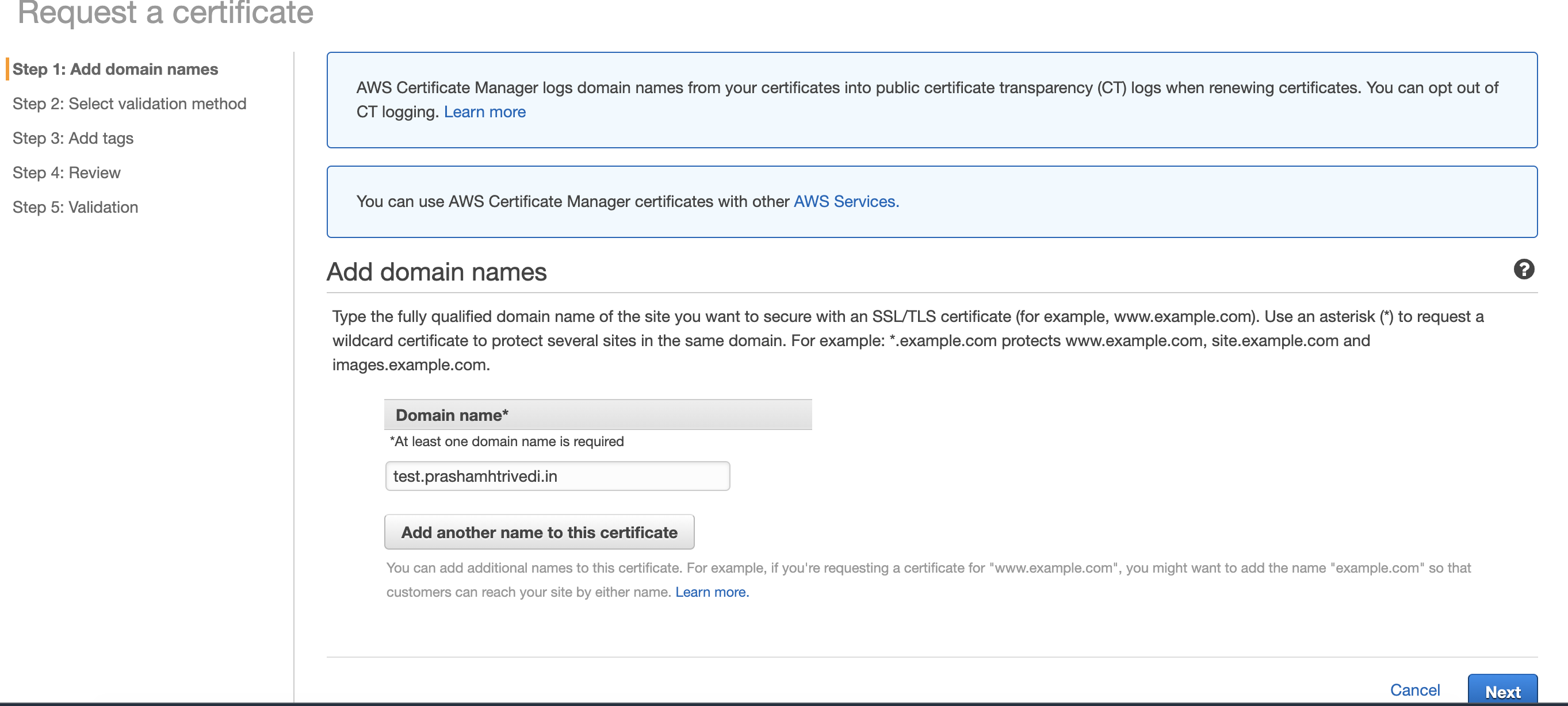

AWS Certificate Manager (ACM) is a free service which issues SSL/TLS certificates for use with various AWS services. The certificates issued are valid for 13 months and are automatically eligible for renewal. To get a certificate for the subdomain, go to AWS Certificate Manager, switch to the us-east-1 (N. Virginia) region and request a public certificate. Enter the full subdomain for which you want the certificate issued, as shown below.

ACM Certification Entry Point

You can use the same certificate for multiple domains. You can also use wildcards, but I did not have any success using them.
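As a general reminder of how wildcard names match (not a diagnosis of what went wrong in my case), a wildcard covers exactly one label. The helper `certificate_covers` below is a hypothetical sketch of that rule.

```python
def certificate_covers(cert_name: str, hostname: str) -> bool:
    """Check whether a certificate name, possibly a wildcard, covers a hostname."""
    cert_labels = cert_name.lower().rstrip(".").split(".")
    host_labels = hostname.lower().rstrip(".").split(".")
    # A wildcard stands for exactly one label, so the label counts must match.
    if len(cert_labels) != len(host_labels):
        return False
    if cert_labels[0] == "*":
        return cert_labels[1:] == host_labels[1:]
    return cert_labels == host_labels
```

So `*.example.com` covers test.example.com, but it covers neither example.com itself nor a.b.example.com.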

After entering your domain, you need to validate your ownership of the domain using either DNS validation or email validation. In DNS validation, AWS asks you to create a CNAME entry in your domain using data provided by AWS. Once you add that entry to your domain, AWS verifies ownership and issues the certificate. In email validation, AWS sends an email to the following email addresses on your domain. This email contains a verification link, and upon clicking that link, AWS verifies ownership and the certificate is issued.

The email addresses where AWS sends verification links are:

- administrator@your_domain_name

- hostmaster@your_domain_name

- postmaster@your_domain_name

- webmaster@your_domain_name

- admin@your_domain_name
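To know which aliases to create before requesting the certificate, the list above can be expanded for a concrete domain. This is a small sketch with a hypothetical helper name, `validation_addresses`; the mailbox names are the five listed above.

```python
VALIDATION_MAILBOXES = ("administrator", "hostmaster", "postmaster", "webmaster", "admin")


def validation_addresses(domain: str) -> list:
    """Expand the five validation mailboxes for a given domain."""
    domain = domain.strip().rstrip(".").lower()
    return [f"{mailbox}@{domain}" for mailbox in VALIDATION_MAILBOXES]
```

At least one of these addresses has to reach an inbox you can read, otherwise the verification link never arrives.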

In my case, email validation took less time than DNS validation, and it can come in handy if you don’t have access to your domain provider’s console. Also, with Google Domains it’s easy to create email aliases, so I opted for email validation.

The next and very useful building block of the setup is CloudFront. To set up CloudFront, let’s move to the next step.

4. Creating CloudFront distribution to serve the site.

CloudFront is a very useful service in AWS. It brings your read-only content closer to the end user, thus enabling speedy delivery. For example, when someone in Gujarat requests a video served through CloudFront, it is cached at the nearest edge location (probably Ahmedabad or Mumbai), so that the video can be consistently delivered with the lowest latency. We are using CloudFront to serve our static site, hosted in N. Virginia, to anywhere in the world over a secure HTTPS channel.

To use CloudFront with AWS Certificate Manager, AWS requires that the certificate is issued in the N. Virginia region. In my experience, for a seamless association between content and certificate, the content should also be in the N. Virginia region. For the sake of experiment, I tried creating a bucket in the Mumbai region, but CloudFront had problems associating the content and the certificate. So I moved the content to the N. Virginia region by creating a new bucket.

In CloudFront, we will link the S3 bucket we created in Step 2 and restrict public access by removing the policy stated in Step 2, so that the bucket can only be accessed through the CloudFront distribution. We will also link the certificate created in Step 3 so that the site can be served over HTTPS.

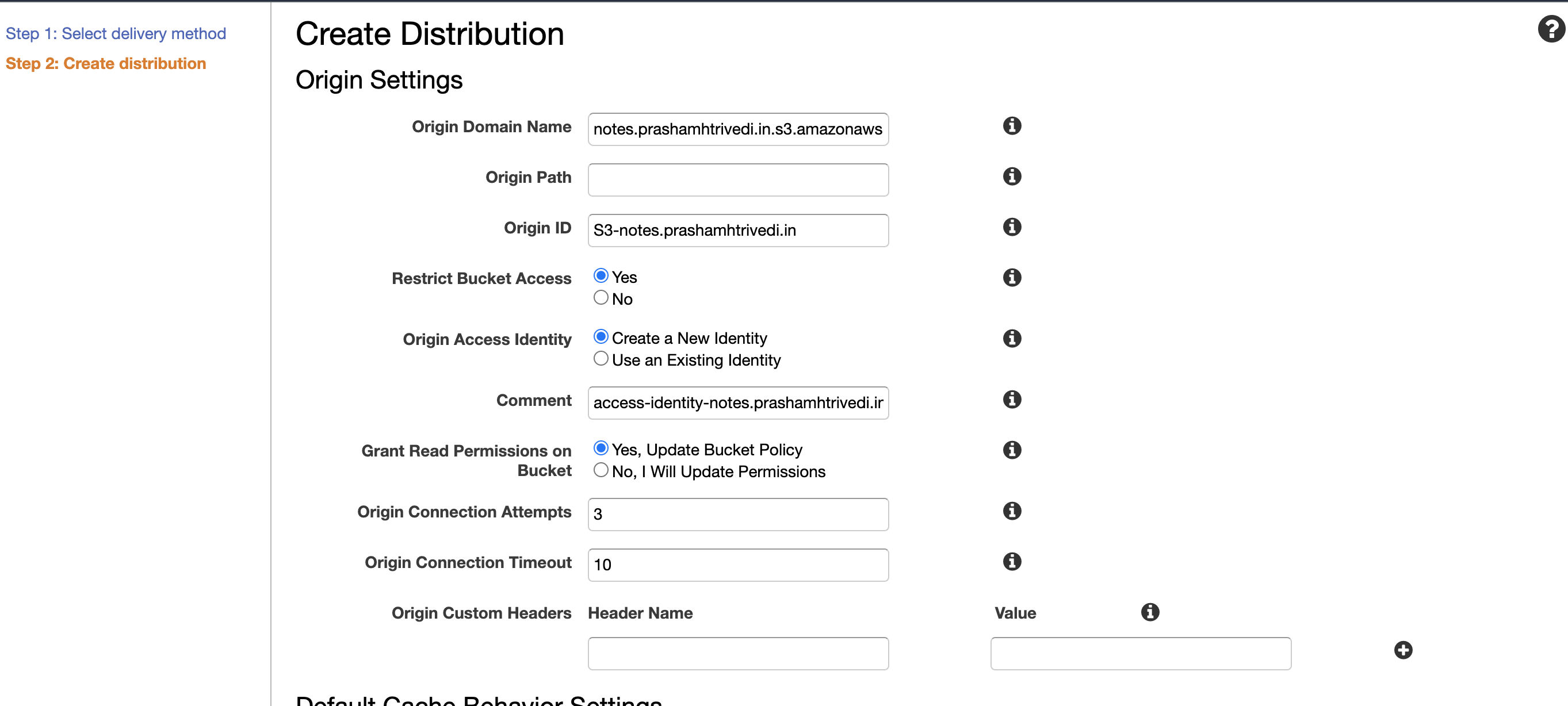

To link CloudFront with S3, create a new distribution of Web type. In Origin Domain Name, select the S3 bucket from the dropdown. Select the Yes radio button in Restrict Bucket Access, create a new Origin Access Identity (OAI) to block any other way of accessing the S3 bucket, and let CloudFront update the bucket policy automatically.

Connecting S3 and restricting access

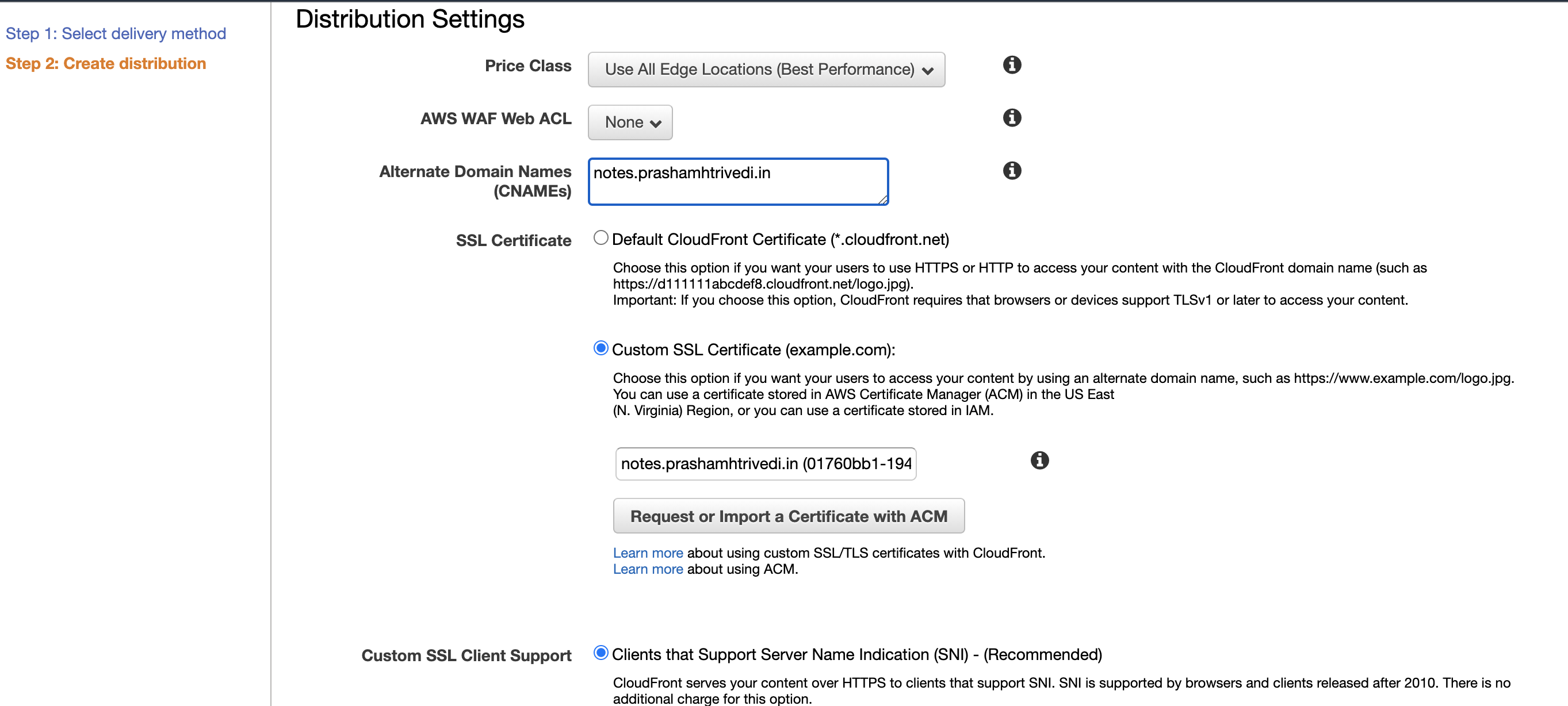

Add your full subdomain (test.example.com) to the Alternate Domain Names (CNAMEs) section. To connect it with ACM, select the Custom SSL Certificate option in the SSL Certificate section. If your configuration up to this point is without any errors, you should see this radio button enabled, and the dropdown should contain at least one certificate entry (created in Step 3), as per the screenshot below.

Connecting ACM with CloudFront

If you don’t see your certificate in the Custom SSL Certificate list, or the radio button is disabled, first confirm that your content as well as your certificate are located in us-east-1, and only then try other troubleshooting.

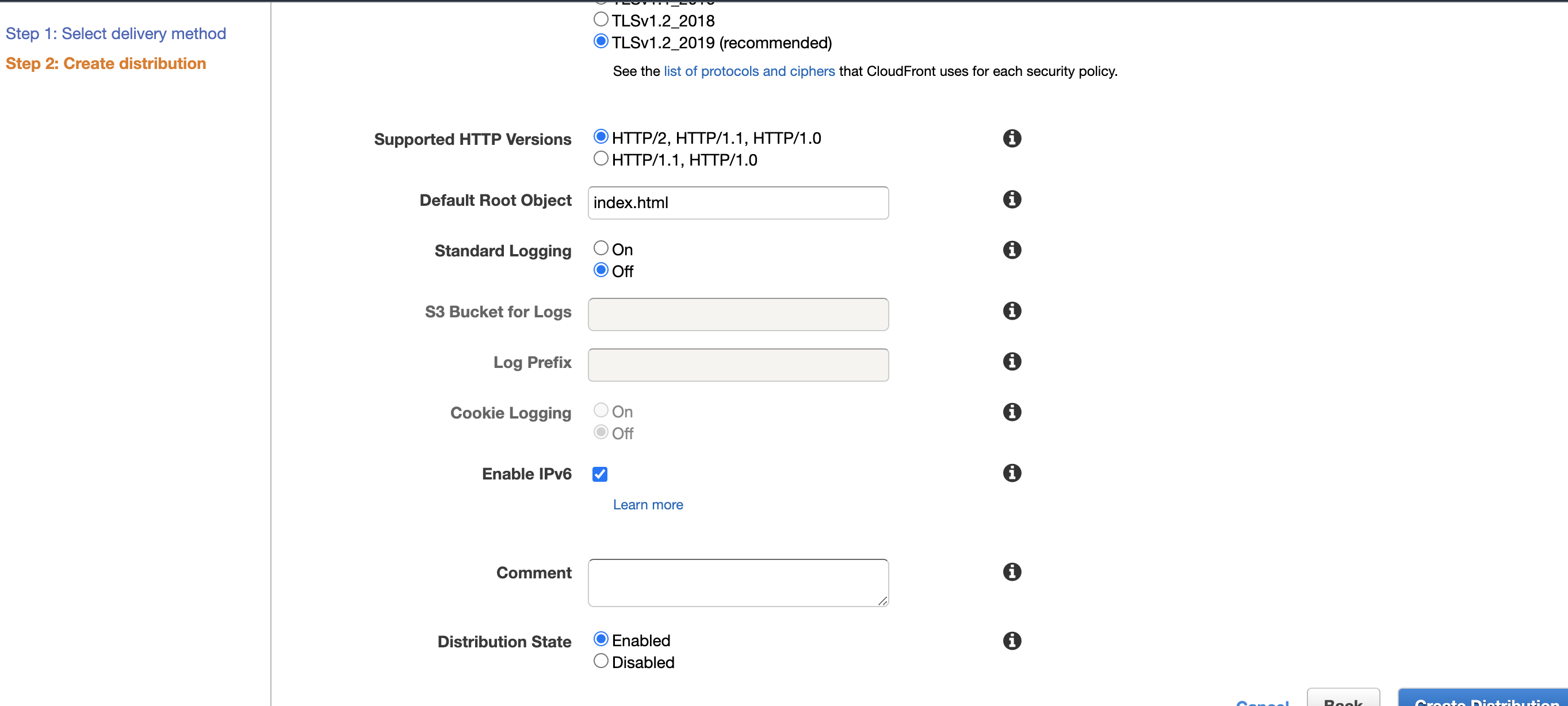

Once the ACM certificate is linked, set index.html of the S3 bucket as the Default Root Object, without a / anywhere in the path. Leave the other options at their default values, as per below.

Configuring S3

When we apply OAI restrictions, our bucket policy will look like below. Here we have restricted read access to a Principal which points to the CloudFront OAI. Again, in the policy below, you need to replace notes.prashamhtrivedi.in with your own bucket name.

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "3",
                "Effect": "Allow",
                "Principal": {
                    "AWS": "arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity EXXYYGRZZZTBUR"
                },
                "Action": "s3:GetObject",
                "Resource": "arn:aws:s3:::notes.prashamhtrivedi.in/*"
            }
        ]
    }
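A quick way to confirm that the public policy from Step 2 is really gone is to scan the policy for a wildcard principal. The sketch below is a rough check only: `allows_anonymous_read` is a hypothetical helper, it ignores policy conditions, and `OAI_POLICY` simply repeats the policy shown above.

```python
import json

OAI_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "3",
            "Effect": "Allow",
            "Principal": {
                "AWS": "arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity EXXYYGRZZZTBUR"
            },
            "Action": "s3:GetObject",
            "Resource": "arn:aws:s3:::notes.prashamhtrivedi.in/*",
        }
    ],
}


def allows_anonymous_read(policy_json: str) -> bool:
    """Return True if any Allow statement grants access to everyone ("*")."""
    statements = json.loads(policy_json).get("Statement", [])
    if isinstance(statements, dict):  # a single statement may not be wrapped in a list
        statements = [statements]
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        principal = statement.get("Principal")
        aws = principal.get("AWS") if isinstance(principal, dict) else principal
        if aws == "*" or (isinstance(aws, list) and "*" in aws):
            return True
    return False
```

With the OAI policy above the check returns False, while the public-read policy from Step 2 would make it return True.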

Once everything is configured, click on Create Distribution. Creating the CloudFront distribution takes around 15-20 minutes.

Once the distribution is created, CloudFront keeps serving the same cached content from all edge locations. To change the content and see the changed content, we need to invalidate the keys related to the changed content. This is a tedious process and takes another 15-20 minutes to propagate everywhere. In my experience, with versioning enabled on the S3 bucket, this time came down to around 10 minutes. That was the reason I asked you to enable versioning on the S3 bucket.
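The invalidation request itself is just a list of paths plus a unique reference. Here is a minimal sketch that builds it from the S3 keys that changed. The helper name `invalidation_batch` is hypothetical, and I am assuming the result is passed as the `InvalidationBatch` argument of boto3’s `create_invalidation` call.

```python
import time
from typing import Optional


def invalidation_batch(changed_keys: list, caller_reference: Optional[str] = None) -> dict:
    """Build a CloudFront invalidation batch for the changed S3 keys."""
    # CloudFront paths start with "/", while S3 keys usually do not.
    # The set also drops duplicate keys.
    paths = sorted({"/" + key.lstrip("/") for key in changed_keys})
    return {
        "Paths": {"Quantity": len(paths), "Items": paths},
        # The reference must be unique per request; a timestamp is a simple default.
        "CallerReference": caller_reference or str(time.time()),
    }
```

Invalidating only the changed keys, rather than everything, keeps the rest of the cached site untouched at the edge locations.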

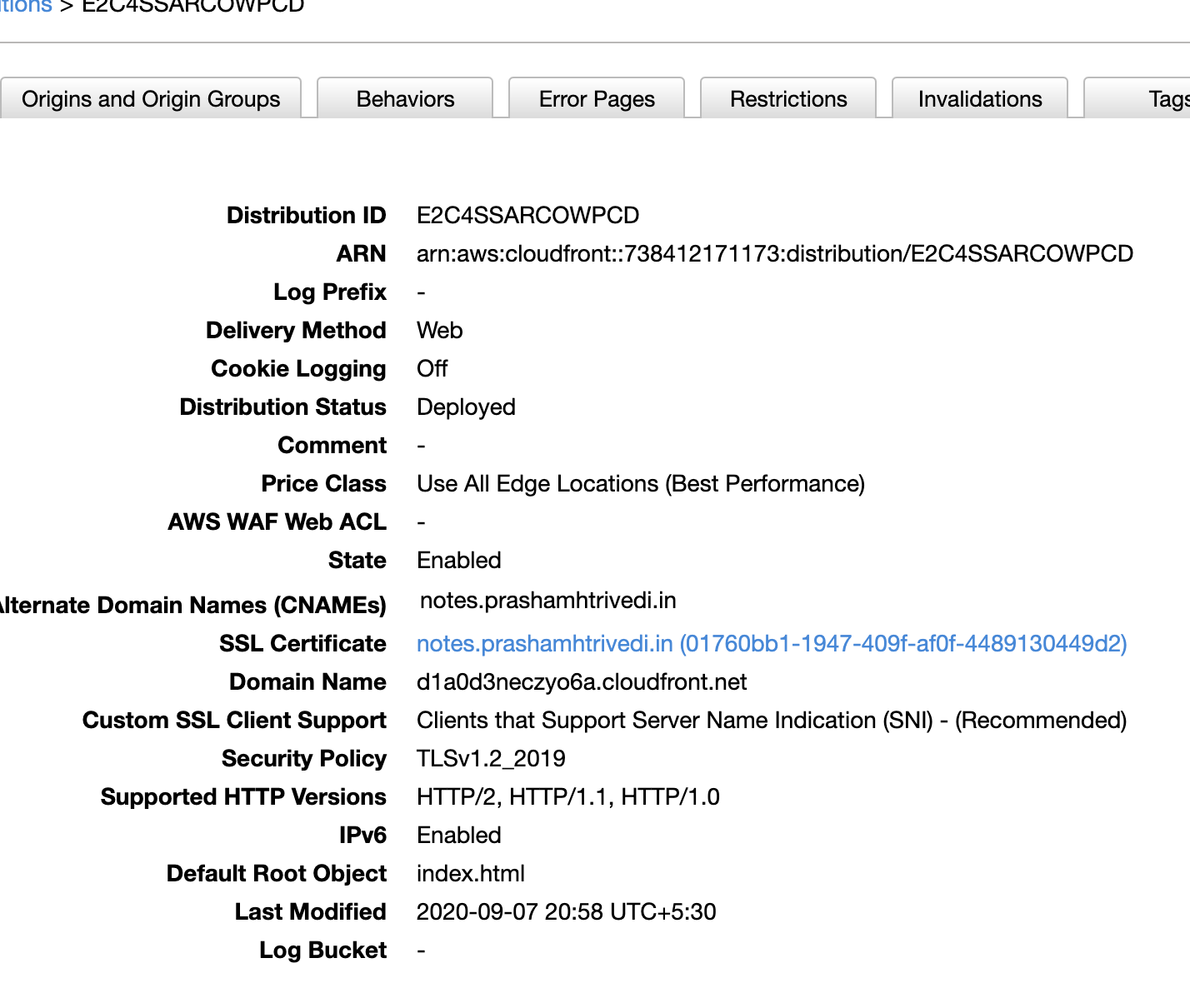

In the last step, we will link Route 53 to the CloudFront distribution we have created in this step. Before moving to the next step, note the Domain Name of the CloudFront distribution, as per below.

CloudFront Data

5. Connecting Route 53 to CloudFront to serve the site using external domain.

This is probably the easiest part of the whole process. At this point, we already have a Route 53 hosted zone connected with the external domain. We also have a static site hosted in an S3 bucket, which is distributed by CloudFront and has an SSL certificate that enables HTTPS.

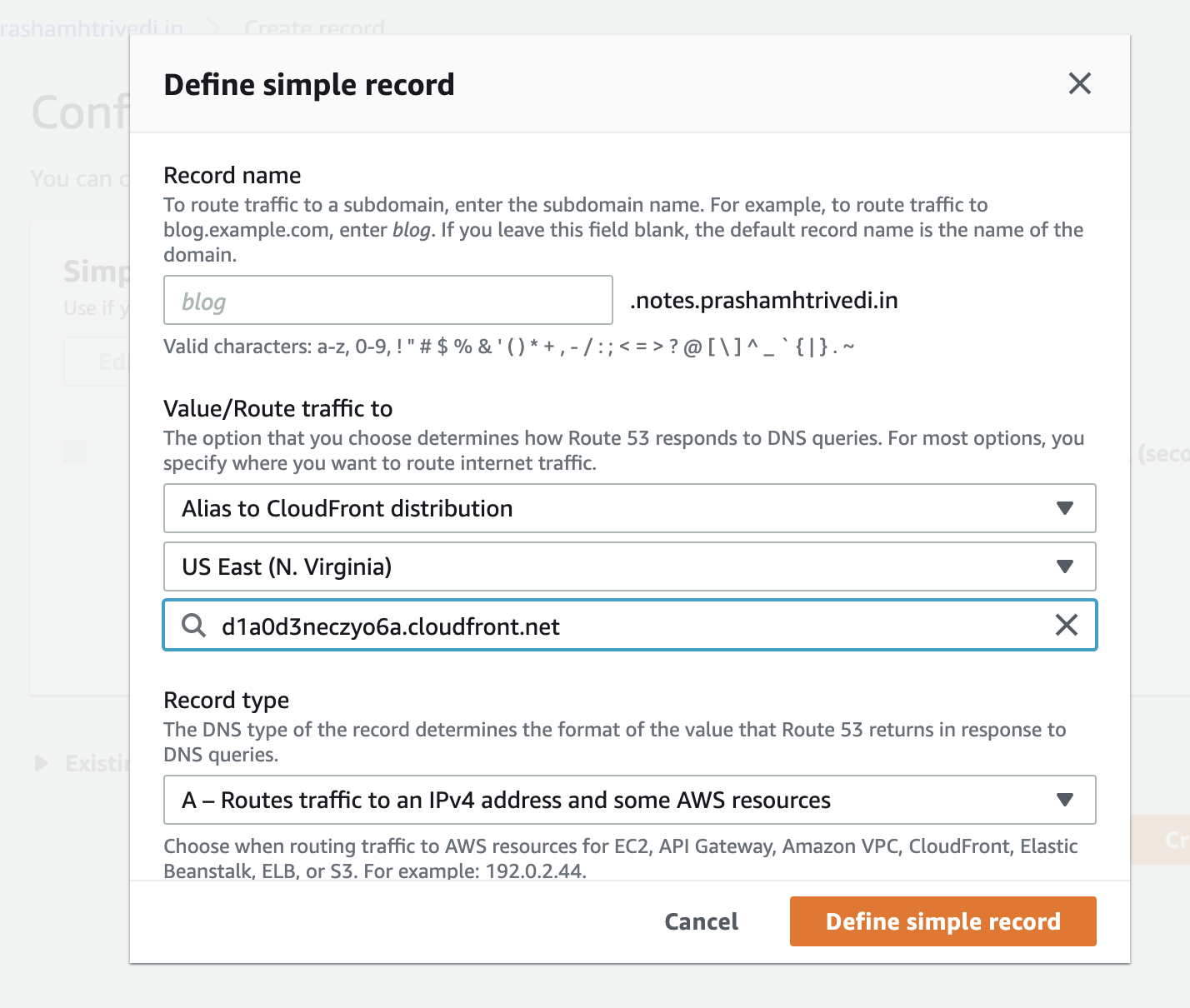

Now create a record. When asked, use Simple Routing. On the resulting page, click Define Simple Record, and in the resulting dialog, check that Record Type has the A record type selected. In the Value/Route traffic to section, select Alias to CloudFront distribution. Select the US East (N. Virginia) region, and in the input below it (which should say Choose Distribution), select the domain of the CloudFront distribution we created in the previous step. The resulting dialog should have values like those shown below.

Route 53 Data
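The same alias record can be written down as a Route 53 change batch. This is a sketch, assuming the dictionary is passed as the `ChangeBatch` argument of boto3’s `change_resource_record_sets` call. The helper name `alias_change_batch` and the distribution domain in the usage note are hypothetical, while `Z2FDTNDATAQYW2` is the fixed hosted zone ID that Route 53 uses for all CloudFront alias targets.

```python
# Fixed hosted zone ID used for every alias that points to CloudFront.
CLOUDFRONT_ALIAS_ZONE_ID = "Z2FDTNDATAQYW2"


def alias_change_batch(record_name: str, distribution_domain: str) -> dict:
    """Build a change batch that aliases record_name to a CloudFront distribution."""
    return {
        "Comment": "Alias subdomain to CloudFront distribution",
        "Changes": [
            {
                # UPSERT creates the record, or updates it if it already exists.
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": record_name,
                    "Type": "A",
                    "AliasTarget": {
                        "HostedZoneId": CLOUDFRONT_ALIAS_ZONE_ID,
                        "DNSName": distribution_domain,
                        "EvaluateTargetHealth": False,
                    },
                },
            }
        ],
    }
```

For example, `alias_change_batch("test.example.com", "d111111abcdef8.cloudfront.net")` describes the same A record that the console dialog creates, with the domain name noted at the end of Step 4 as its target.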

Once you successfully create the record, you have connected your S3 static website to an external domain using Route 53, served closer to your users using CloudFront, with the confidence of HTTPS from AWS Certificate Manager.

This demo and explanation is for an S3 website, but using CloudFront we can create a similar setup for load balancers, EC2, MediaPackage etc. Only the configuration changes according to the origin we have selected; the rest is the same as described in this post.

I hope this has helped you create a secure website using various components of AWS. In this post, we have discussed how to host a static website on S3 with an external domain provider and HTTPS.

The website itself is written in Markdown, converted into HTML using a custom Node script, and the generated HTML pages are deployed using GitHub Actions. We will dive deep into the notes site’s architecture in the next post.