Gemini 3 Pro vs GPT 5.2: A Blind Test Reveals Code Red DNA


Same input, same instructions, very different results

You know that feeling when two candidates give you the same answer in an interview, but one clearly understood the question and the other just… covered their bases?

That’s exactly what happened when I ran a blind A/B test between Gemini 3 Pro and GPT 5.2. Same system prompt. Same input. Same thinking level. No tools. The harness randomized which model was which; I only saw “Response 1” and “Response 2” until I unlocked the results.
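The harness itself isn’t shown here, but the blinding idea is small. A minimal sketch, assuming each model’s output for the same input is already collected in a dict; `make_blind_trial` and `BlindTrial` are hypothetical names, not the actual harness:

```python
import random
from dataclasses import dataclass, field


@dataclass
class BlindTrial:
    """One blind comparison: the evaluator only sees anonymous labels."""

    shown: dict[str, str]  # label -> response text, e.g. "Response 1" -> "..."
    key: dict[str, str] = field(repr=False)  # label -> model name, kept hidden

    def unlock(self) -> dict[str, str]:
        """Reveal which model produced each labeled response (call after scoring)."""
        return dict(self.key)


def make_blind_trial(outputs: dict[str, str], rng: random.Random) -> BlindTrial:
    """Assign each model's output to a random anonymous label.

    `outputs` maps a model name to its response for the same input.
    """
    models = list(outputs)
    rng.shuffle(models)  # random order, so "Response 1" says nothing about the model
    labels = [f"Response {i}" for i in range(1, len(models) + 1)]
    shown = {label: outputs[model] for label, model in zip(labels, models)}
    key = {label: model for label, model in zip(labels, models)}
    return BlindTrial(shown=shown, key=key)


if __name__ == "__main__":
    trial = make_blind_trial({"model-a": "output A", "model-b": "output B"}, random.Random())
    for label, text in trial.shown.items():
        print(label, "->", text)  # evaluate these first...
    print(trial.unlock())         # ...then reveal identities
```

Keeping the key out of the dataclass `repr` is a small guard against accidentally printing the answer while you’re still scoring.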

What I found wasn’t just a difference in output quality. It was a window into how training circumstances shape a model’s personality in ways no prompt can override. (If you’ve read my thoughts on AI agent configuration debt, this will feel like a continuation of that story.)

The task: converting slash commands for sandboxed agents

I’ve been working on updating the slash command converter for Agent Config Adapter. The tool converts Claude Code slash commands into standalone prompts that can run in sandboxed coding agents like Jules or Claude Code Web.

Here’s the key context about these sandboxes: they’re Linux VMs with a git repo that is always checked out clean. No network access. Commands like gh, curl, and git fetch/pull simply don’t work. The converted prompt needs to account for this: remove checks for things the sandbox guarantees, and strip network operations that can’t execute.
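If you’d rather not rely on the model alone for the stripping step, a deterministic backstop is possible. A minimal sketch, assuming the converted prompt is plain text; `strip_network_ops` is a hypothetical helper, not something this post says Agent Config Adapter does:

```python
import re

# Commands listed above as unusable in a network-less sandbox.
NETWORK_RE = re.compile(r"\b(?:gh|curl|git\s+fetch|git\s+pull)\b")


def strip_network_ops(prompt: str) -> tuple[str, list[str]]:
    """Drop every line that invokes a network command.

    Returns the remaining prompt text and the dropped lines, so a human can
    review what was cut. This is line-based and blunt: a prose line that
    merely mentions `curl` is dropped too.
    """
    kept: list[str] = []
    dropped: list[str] = []
    for line in prompt.splitlines():
        (dropped if NETWORK_RE.search(line) else kept).append(line)
    return "\n".join(kept), dropped
```

Returning the dropped lines instead of silently discarding them makes it easy to spot false positives before the prompt goes to the sandbox.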

The test input was my /startWork slash command—a complex workflow with branch management, checkpoint creation, and git status handling. Perfect for stress-testing how models handle explicit removal instructions.

Round 1: both models fail the same way

First round, both models preserved all the git repo checks and clean working directory detection from the original slash command. Stuff like test -d .git && echo "true" and stash handling. The sandbox guarantees these things, so the converted prompt shouldn’t include them.

I dug into why.

The system prompt had “preserve workflows” as a prominent first bullet under conversion rules. But “remove git status checks” was buried in a parenthetical in step 3. Meanwhile, the original slash command had these checks with headers, code blocks, procedural framing—high visual salience.

Both models saw detailed procedural content, saw “preserve workflows” front and center, and kept the checks. The removal instruction lost the tug-of-war.

Lesson learned: When your “preserve” and “remove” instructions compete for attention, the one with more formatting weight wins.

The fix: salience matching

I restructured the prompt. Added a MUST REMOVE section with equal formatting weight to the preservation rules. Used visual markers:

## ❌ MUST REMOVE (Sandbox Guarantees These)

- **Is this a git repo?** — always yes, never check

- **Working directory clean?** — always clean, no stash handling needed

- **Network operations** — `fetch`, `pull`, `gh` commands can't work

Equal visual weight. Equal chance in the tug-of-war.
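One way to keep that parity from drifting as the prompt evolves is to render preservation and removal rules through the same template. A minimal sketch: `render_section` and the ✅ MUST PRESERVE wording are hypothetical, while the MUST REMOVE rules are the ones quoted above (with a colon in place of the dash):

```python
Rule = tuple[str, str]  # (bold key, explanation)


def render_section(marker: str, title: str, rules: list[Rule]) -> str:
    """Render one rule section: level-2 heading, then bold-keyed bullets.

    Routing both "keep" and "remove" rules through this one function keeps
    their formatting weight identical.
    """
    lines = [f"## {marker} {title}", ""]
    lines += [f"- **{key}**: {detail}" for key, detail in rules]
    return "\n".join(lines)


# Hypothetical preservation rule; the post doesn't quote its exact wording.
PRESERVE: list[Rule] = [("Workflow steps", "keep every step, in order")]

# Removal rules taken from the MUST REMOVE section above.
REMOVE: list[Rule] = [
    ("Is this a git repo?", "always yes, never check"),
    ("Working directory clean?", "always clean, no stash handling needed"),
    ("Network operations", "`fetch`, `pull`, `gh` commands can't work"),
]

rules_block = "\n\n".join([
    render_section("✅", "MUST PRESERVE", PRESERVE),
    render_section("❌", "MUST REMOVE (Sandbox Guarantees These)", REMOVE),
])

if __name__ == "__main__":
    print(rules_block)
```

The design choice is simply that neither list can gain extra headers, code blocks, or emphasis without the other getting the same treatment.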

Round 2: Gemini 3 Pro vs GPT 5.2 diverge

Same prompt fix, dramatically different results

In the second round, the fix partially worked. But the results revealed something more fundamental than prompt structure.

Both models removed the explicit git checks. Good. But the outputs diverged dramatically.

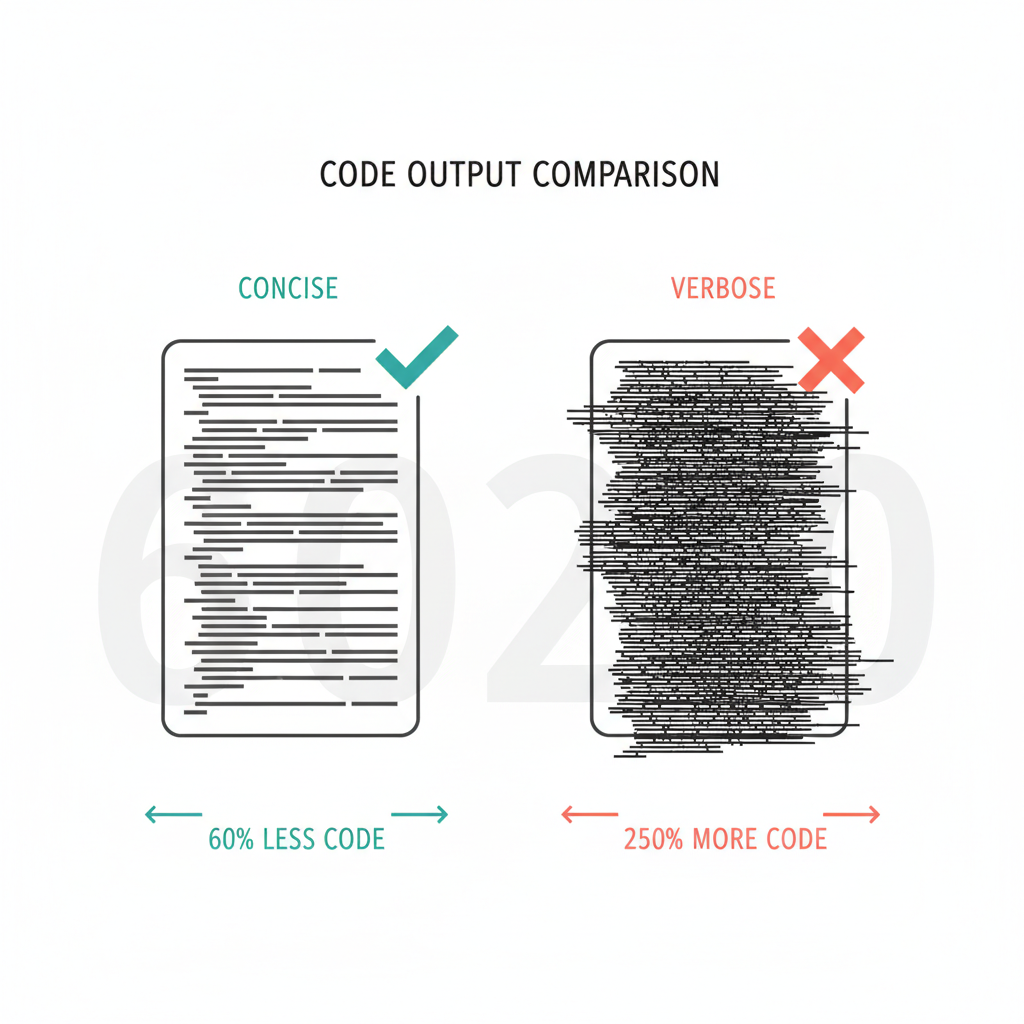

Model A: ~40 lines. Clean. Surgical. Exactly what the sandbox needed.

Model B: ~150 lines. Persona phrases still present (“You are starting…”) despite explicit removal instructions. Defensive hedging everywhere. And somehow fetch/pull snuck back in—the exact network operations the system prompt said to remove.

I revealed the identities.

Model A was Gemini 3 Pro. Model B was GPT 5.2.

Round 3: with tools, the gap widens

Third round, I enabled tools that inject skill and agent references into the converted output.

Gemini 3 Pro produced this elegant appendix architecture—main workflow up top saying “use the Checkpoint Validator,” then a Reference section at the bottom with the actual skill definition. Clean separation. ~100 lines total, maybe 40% is the appendix. Copy-paste ready. Two lines to tweak, max.

GPT 5.2 produced ~250 lines. Still had interactive user prompting (“Ask for confirmation only if needed”) despite explicit removal instructions. Defensive hedging throughout. A whole section re-explaining what NOT to do—which defeats the purpose of removing things.
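Gemini’s layout is also easy to reproduce deterministically. A minimal sketch, assuming skills arrive as a name-to-definition mapping; `assemble_with_appendix` is a hypothetical helper, not Agent Config Adapter’s actual code:

```python
def assemble_with_appendix(workflow: str, skills: dict[str, str]) -> str:
    """Put the workflow first and full skill definitions in a Reference appendix.

    The workflow mentions skills by name only (e.g. "use the Checkpoint
    Validator"). Only skills the workflow actually names are appended, so the
    appendix never carries unused definitions.
    """
    used = {name: body for name, body in skills.items() if name in workflow}
    parts = [workflow.rstrip()]
    if used:
        parts += ["", "## Reference"]
        for name, body in used.items():
            parts += ["", f"### {name}", "", body.strip()]
    return "\n".join(parts) + "\n"
```

The main steps stay short because each one points at a skill by name, and the reader (human or agent) only scrolls to the appendix when it needs the details.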

The “Code Red” connection

I looked up the release timelines.

Gemini 3 Pro launched November 18, 2025. Topped LMArena with 1501 Elo. State-of-the-art across benchmarks. Google had time to ship what they built.

GPT 5.2 launched December 9-11, 2025. Fast-tracked. Originally scheduled for late December but pushed forward after Gemini dominated. OpenAI declared “Code Red”: Sam Altman marshaled resources, and the release was accelerated.

The competitive panic is baked into the model.

GPT 5.2 was trained for “don’t miss anything,” “cover all bases,” “hedge everything.” That’s not a bug you can prompt around. That’s the model’s personality now.

The numbers

| Metric | Gemini 3 Pro | GPT 5.2 |
|---|---|---|
| Core workflow lines | ~60 | ~250 |
| Length ratio | 1x | 4.2x |
| Sandbox violations after prompt fix | 0 | 2+ |
| Persona phrases removed | Yes | No |
| Interactive prompts removed | Yes | No |
| Network ops removed | Yes | No (fetch/pull) |
| Copy-paste ready | Yes | No |
| Tweaks needed | 2 lines | Full rewrite |
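If you want rows like these to be repeatable across runs, a small audit script helps. This is a hypothetical sketch: the regex patterns are illustrative heuristics, not the method behind the numbers above. The ratio row is just 250 / 60 ≈ 4.17, rounded to 4.2.

```python
import re

# Illustrative heuristics for three of the table's rows (assumptions, not the
# method used to produce the numbers above).
VIOLATION_PATTERNS = {
    "persona phrase": re.compile(r"\bYou are\b"),
    "interactive prompt": re.compile(r"\bask (?:the user|for confirmation)\b", re.IGNORECASE),
    "network op": re.compile(r"\b(?:git\s+(?:fetch|pull)|gh|curl)\b"),
}


def audit(output: str) -> dict[str, int]:
    """Count the lines in one converted prompt that hit each violation pattern."""
    counts = {name: 0 for name in VIOLATION_PATTERNS}
    for line in output.splitlines():
        for name, pattern in VIOLATION_PATTERNS.items():
            if pattern.search(line):
                counts[name] += 1
    return counts


def length_ratio(baseline_lines: int, other_lines: int) -> float:
    """How many times longer `other` is than `baseline`, to one decimal place."""
    return round(other_lines / baseline_lines, 1)


if __name__ == "__main__":
    print(length_ratio(60, 250))  # 250 / 60 = 4.1666..., printed as 4.2
```

Pattern matching will miss paraphrased hedging, so treat the counts as a floor, not a verdict.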

What this means for prompt engineering

Salience matching raises the floor, but it can’t change the ceiling.

Gemini needed permission and clarity to cut. Once I gave it explicit MUST REMOVE sections with equal formatting weight, it cut surgically. The model was waiting for clear instructions. (This echoes what I found when comparing three AI agents on writing analysis—configuration and methodology matter as much as raw capability.)

GPT 5.2’s training prior was stronger than my explicit instructions. I tried bold formatting, emoji markers, explicit MUST REMOVE sections. The model’s “Code Red DNA” found new ways to express defensiveness. Remove one hedging pattern, another appears.

So this isn’t a prompt problem. It’s a personality problem.

Why my ChatGPT usage dropped to zero

Six months ago, I stopped reaching for ChatGPT. Not a conscious decision—it just happened.

When I need code generation, I reach for Claude Code. When I need research or reasoning, I reach for Gemini. ChatGPT became the thing I opened once a month to check if I still had a subscription.

This blind test confirmed what my fingers already knew. Gemini output is a handoff artifact I can send straight to Claude Code or Jules. It’s usable. GPT output needs a full rewrite before it becomes usable—at which point, why did I involve the model at all?

The usage pattern writes itself.

The uncomfortable truth

Nothing can be done about this.

You can’t prompt-engineer around training circumstances. Code Red shipped a model optimized for benchmarks and PR recovery, not for people who actually read the outputs. The defensive hedging, the “cover all bases” approach, the inability to make surgical cuts—these aren’t bugs to fix with better prompts. They’re features of how the model was trained under pressure.

When you need a model that follows removal instructions, that makes clean cuts, that trusts the constraints you’ve defined—you need a model that had time to be trained for that. Not one that was fast-tracked to catch up.

May the force be with you…

Running your own model comparisons? I’d recommend blind A/B testing, with the identities hidden until you’ve finished evaluating. It’s uncomfortable when your assumptions get challenged, but that’s the point. The usual channels work if you’ve got your own war stories to share.